Due to technological advancements such as text-to-speech, natural language processing (NLP), and machine learning, new forms of interfaces are emerging. Enterprises such as Google, Amazon, and Microsoft have been investing in the field of conversational user interfaces (CUI). How could we take advantage of this technology and create a voice agent that supports learning new skills while delivering an engaging user experience?

Why a cooking assistant

CUIs offer clear advantages in some areas. For instance, a hands-free interface is well suited to teaching cooking skills, because users have their hands busy while they cook. The field is relatively new, and recent studies help to understand how to create better collaboration between computer agents and humans.

My role:

- Conducted role-plays and Wizard of Oz sessions;

- Interviewed a chef de partie;

- Created and iterated dialogue trees;

- Defined utterances, system answers, and fallbacks;

- Assessed all the dialogue trees and proposed changes based on technical feasibility;

- Planned entities, intents, follow-ups and other technical dependencies;

- Prototyped and did initial tests on Dialogflow.

Tools used:

- Miro;

- Dialogflow;

- Brainstorming;

- Affinity diagram;

- JavaScript;

- After Effects;

- Adobe Premiere.

Methodology

Research & Data Analysis

The initial research was based on literature reviews. Academic papers were studied to understand the state of the art of this technology and the advantages and disadvantages of such an interface. One of the biggest challenges of CUIs is communicating clearly, using only voice, in a world dominated by visual interfaces. To understand more about this challenge, interviews were conducted to analyse how people communicate instructions verbally, e.g. the words they use and the flow of the conversation.

After research and discussions about different skills, the final decision was to create a voice agent that coaches pasta-related skills: how to make pasta dough, shape it, boil it and make the sauce.

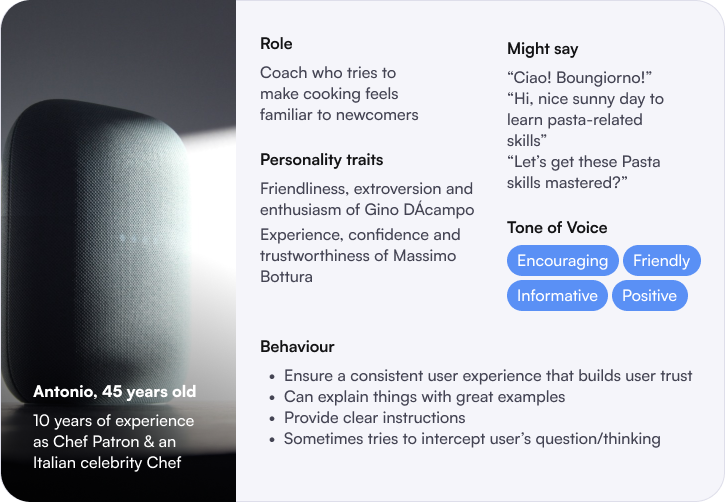

Agent personality

Following that, personas describing the agent's personality were defined (name, behaviour, short bio, etc.). These are some of the characteristics identified before the ideation phase. They were based on the initial research, brainstorming, and interviews:

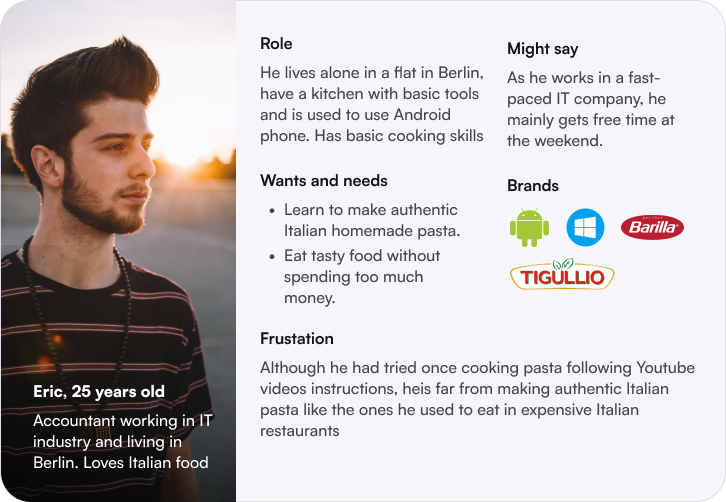

User persona

Based on the personas, some of the agent functionalities were listed to fulfill the needs of the target users. They guided the idea generation phase, technology assessment, and prototype creation.

- Say some Italian words;

- Be supportive, positive, and friendly;

- Be trustworthy and have a warm tone of voice;

- Provide alternatives and dynamic flows based on user commands;

- Be able to clarify some user questions without being repetitive;

- Communicate measurements accurately;

- Check the availability of ingredients before giving instructions;

- Intercept thinking whenever possible;

- Explain technical terms related to cooking for novices.

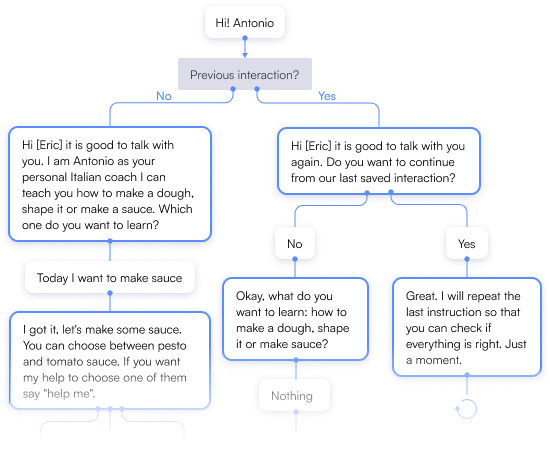

Ideas generation and prototypes

As mentioned above, the ideation phase revolved around tree-based dialogue diagrams, which were iterated through role-playing and Wizard of Oz sessions. In each iteration, a considerable number of the suggestions concerned how to modify the tree to achieve a more fluid interaction.

Some of the learnings from this phase include:

- Make the agent more friendly by calling the user by their name;

- Save user preferences to avoid long and repetitive flows;

- Provide the users with a way to save and resume different stages to avoid making them repeat the same steps;

- Provide helpful suggestions when the user runs out of some ingredients or tools;

- Limit the servings based on the user's expertise;

- Provide extra instructions about how to evaluate the results, so that users can check for themselves whether they finished successfully or need additional help;

- Identify missing steps and additional fallbacks.

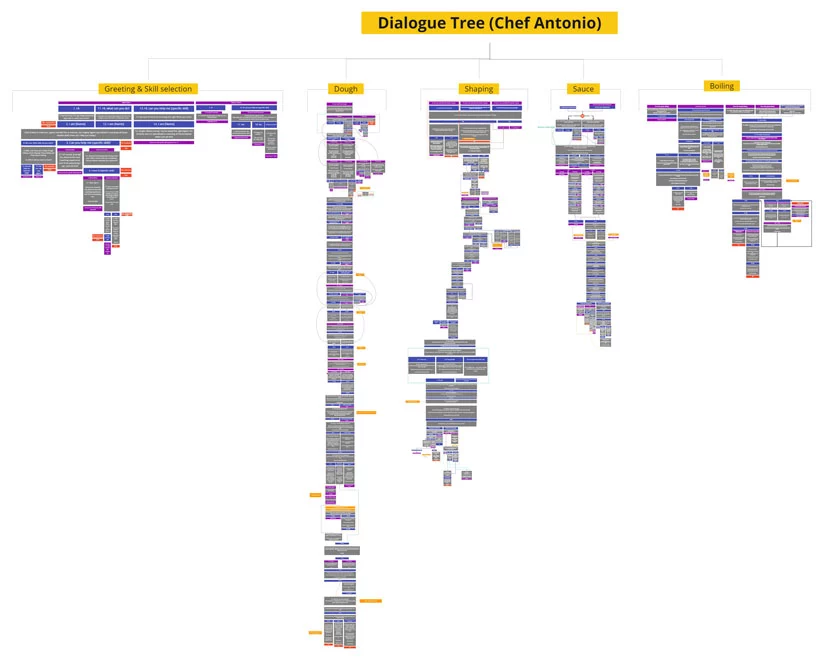

Below is an overview of the final dialogue tree with all skills:

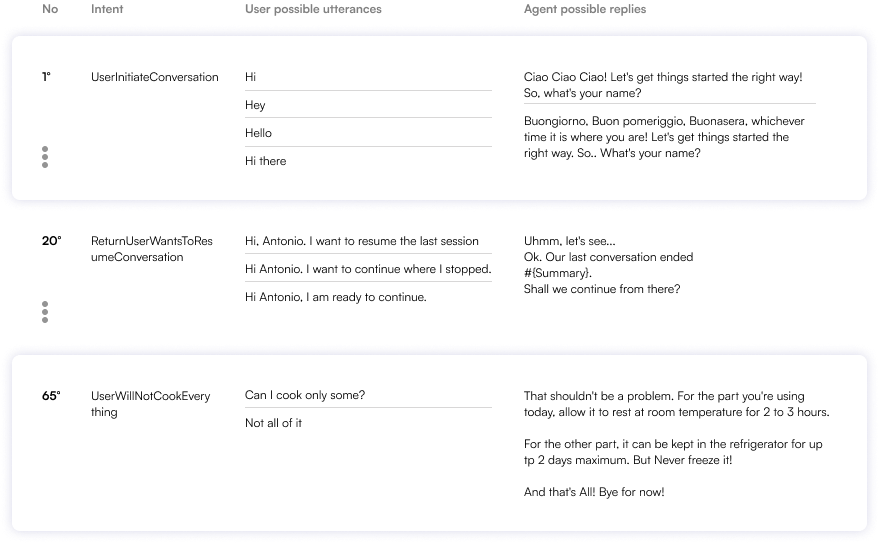

With the conversation flow defined, the next step was to create a table of possible utterances and answers (see an example below).

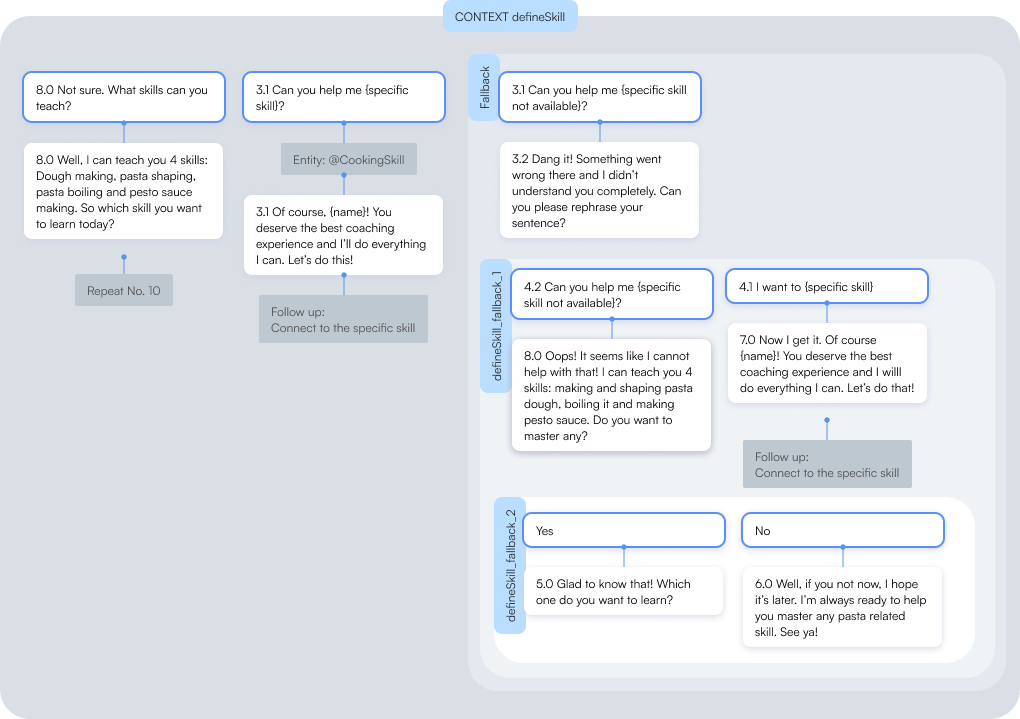

Before prototyping the system in Dialogflow, intents, entities, follow-ups, events, and implicit invocations (also known as deep links) were planned.
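To illustrate how planned intents, parameters, and fallbacks might come together in fulfilment code, here is a minimal sketch. It assumes the request and response shape of a Dialogflow ES webhook (`queryResult.intent.displayName`, `queryResult.parameters`, `fulfillmentText`). The intent names, parameters, and replies are hypothetical and are not taken from the actual prototype.

```javascript
// Minimal sketch of intent routing with a fallback.
// Intent names, parameters, and replies are hypothetical examples.
const FALLBACK_TEXT =
  "Sorry, I did not catch that. You can say 'next step' or 'repeat'.";

// A Map avoids accidental matches on inherited object properties.
const handlers = new Map([
  [
    "pasta.dough.start",
    (params) => {
      // Default to 2 servings when the entity was not captured.
      const servings = Number(params.servings) || 2;
      return `Perfetto! Let's make dough for ${servings} servings.`;
    },
  ],
  [
    "tools.confirm",
    (params) =>
      params.tool
        ? `Great, you have the ${params.tool}.`
        : "Which tool do you have ready?",
  ],
]);

// Takes a webhook request body and returns a webhook response body.
function handleWebhook(body) {
  const queryResult = (body && body.queryResult) || {};
  const intentName = queryResult.intent
    ? queryResult.intent.displayName
    : undefined;
  const handler = handlers.get(intentName);
  const text = handler ? handler(queryResult.parameters || {}) : FALLBACK_TEXT;
  return { fulfillmentText: text };
}

// Example request, shaped like a Dialogflow ES webhook call.
const reply = handleWebhook({
  queryResult: {
    intent: { displayName: "tools.confirm" },
    parameters: { tool: "rolling pin" },
  },
});
console.log(reply.fulfillmentText);
```

Keeping one handler per intent mirrors the structure of the dialogue tree, and the single fallback reply covers any utterance that does not match a planned intent.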

In the video below, you can view a short demonstration of the system using the Dialogflow simulator:

Some final characteristics:

- Speech Synthesis Markup Language (SSML) was added to make the voice agent sound more natural and expressive by changing voice intonation and speed and by adding extra pauses.

- The user can activate the agent by saying different utterances, e.g., “I have the rolling pin”, “I have all ingredients to make the sauce”.

- A significant number of training phrases were added to make the system activate the right intent.

- Although Dialogflow does not offer the option to use multiple languages within one agent, some workarounds were made to make some Italian words sound more “Italian” when pronounced by an English voice synthesiser, for instance, “Buongiorno” became “Buwonjorrrno”.

- The agent tracks user progress and uses this data to customize the experience of different skills.

- Ingredient measurements are given in the user's preferred unit and number of servings (both are defined the first time the user interacts with the system).

- The agent can play Italian music while the user completes longer tasks (2 to 5 minutes).
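Several of the characteristics above (SSML pauses and speed, phonetic respelling of Italian words, and measurements adapted to the user's unit and servings) can be sketched in a few small functions. This is an illustration under stated assumptions, not the prototype's actual code: the function names, the per-serving amount, and the ounce conversion are hypothetical, and only the "Buongiorno" respelling comes from the text.

```javascript
// Respellings that make an English voice sound more "Italian".
// Only this entry is taken from the article; others would be added the same way.
const RESPELLINGS = { Buongiorno: "Buwonjorrrno" };

// Escape characters that are not allowed inside SSML text.
function escapeXml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Replace every known Italian word with its phonetic respelling.
function respell(text) {
  return Object.keys(RESPELLINGS).reduce(
    (out, word) => out.split(word).join(RESPELLINGS[word]),
    text
  );
}

// Scale a per-serving amount in grams and phrase it in the preferred unit.
// The 28.35 g per ounce factor is a rounded conversion.
function scaleAmount(gramsPerServing, servings, unit) {
  const grams = gramsPerServing * servings;
  if (unit === "oz") {
    return `${(grams / 28.35).toFixed(1)} ounces`;
  }
  return `${Math.round(grams)} grams`;
}

// Join sentences with pauses and slow the speaking rate slightly.
function toSsml(sentences, pauseMs = 500) {
  const body = sentences
    .map((sentence) => respell(escapeXml(sentence)))
    .join(`<break time="${pauseMs}ms"/>`);
  return `<speak><prosody rate="slow">${body}</prosody></speak>`;
}

// Hypothetical stored preferences: 2 servings, metric units,
// and an assumed 100 g of flour per serving.
const amount = scaleAmount(100, 2, "g");
console.log(toSsml(["Buongiorno!", `Weigh ${amount} of flour.`]));
```

With these assumed values, the call prints `<speak><prosody rate="slow">Buwonjorrrno!<break time="500ms"/>Weigh 200 grams of flour.</prosody></speak>`.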

Results

After more than 200 intents, 1,000 lines of JavaScript fulfilment code, tests (on the simulator and Android phones), and bug fixes, the prototype was finalized. See below a short video showing the main features implemented:

Chef Antonio is a conversational agent aimed at coaching users on how to master pasta-related skills. Its ability to play Italian music, customize the experience and say some Italian words creates a warm and Italian-like atmosphere.

Team: Atie Daee | Hala ElShawa | Lucas Andrade da Costa | Md Shakhawat Hossain | Sourav Bhattacharjee

Image credits:

https://unsplash.com/

kisspng